This article “Ensure time series forecasts stay within limits” looks at a technique for keeping time-series forecasts inside specified boundaries. This can be useful in a variety of situations, from financial planning to inventory management. And don’t worry if you’re not a programming expert: we’ll also walk through some Python code that you can use to implement the technique yourself.

Requirement of time series forecast to be within some specified range

It’s important to ensure time series forecasts stay within limits to avoid impossible or misleading results. It is common to want forecasts to be positive, or to require them to lie within some specified range [a,b]. For example, the count of students in a classroom can’t exceed the seating capacity, and the marks of a student can’t be negative. Both of these situations are relatively easy to handle using transformations.

The following are the steps:

- Let the time series be “y”

- Transformed time series (yt): Transform y using a function that can be reversed, such as square (for non-negative data), log, etc.

- Build a model on “yt”

- Forecast future values using the model. Let’s call the forecasted series “ytf”

- Reverse transform the forecasted values to bring back the values to the original scale.
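As a rough illustration of these steps, here is a minimal sketch that uses a log transform to keep forecasts positive. The data are made up for this example, and the straight-line trend fitted with np.polyfit is only a stand-in (an assumption, not part of the original steps) for whatever forecasting model you actually use.

```python
import numpy as np

# Illustrative declining series (made-up data)
y = np.array([50.0, 30.0, 18.0, 11.0, 6.0])
t = np.arange(len(y))
t_future = np.arange(len(y), len(y) + 5)

# Stand-in model fitted directly on y: the straight line ends up below zero
raw_forecast = np.polyval(np.polyfit(t, y, 1), t_future)

# Transform y, build the same stand-in model on yt, forecast, reverse transform
yt = np.log(y)
ytf = np.polyval(np.polyfit(t, yt, 1), t_future)
yf = np.exp(ytf)  # exp() of any real number is positive

print("untransformed forecast goes negative:", raw_forecast.min() < 0)
print("back-transformed forecast stays positive:", bool((yf > 0).all()))
```

Because the reverse transform here is the exponential function, the forecasts on the original scale cannot be negative, whatever the model predicts on the transformed scale.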

Example:

Let the time series be “y” & transformation function be “Square-Function”.

y=[1,2,3,4,5]

So, transformed time series yt=y^2.

yt=y^2=[1,4,9,16,25]

Build a model on “yt” (i.e. y^2) and forecast values using the model.

Let the forecasted values be “ytf”.

ytf=[36,49,64]

Reverse transform the forecasted values “ytf”. In this case, the reverse transformation is the square root of “ytf”. Now we have values on the original scale (let the reverse transformed values be “yf”).

yf=square root(ytf)=[6,7,8]

As can be seen, this was the journey from “y”([1,2,3,4,5]) to “yf”([6,7,8]).

Also, the transformation function was the “Square function” & reverse transform function was the “square root function”.
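The worked example can be reproduced with a few lines of NumPy. This is only a sketch: the quadratic trend fitted with np.polyfit is a hypothetical stand-in for the forecasting model, chosen because it happens to fit the squared series exactly.

```python
import numpy as np

y = np.array([1, 2, 3, 4, 5], dtype=float)
t = np.arange(1, len(y) + 1)

# Transformation function: square
yt = y ** 2  # [1, 4, 9, 16, 25]

# Stand-in model (assumption): quadratic trend fitted on the transformed series
coeffs = np.polyfit(t, yt, 2)
t_future = np.arange(6, 9)
ytf = np.polyval(coeffs, t_future)  # approximately [36, 49, 64]

# Reverse transformation function: square root
yf = np.sqrt(ytf)  # approximately [6, 7, 8]

print(np.round(ytf, 6))
print(np.round(yf, 6))
```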

Scaled Logit Transform

This transformation helps us in dealing with strict limit requirements (Floor: Minimum possible values and Cap: Maximum possible value).

The idea here is to build the known property of the time series (i.e. it stays within limits) into the forecast by using the scaled logit transform, which restricts the back-transformed values to those limits.

To handle data constrained to an interval, imagine that the forecast is constrained to lie within the interval (a,b), where:

a=Floor & b=Cap

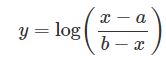

Then, we can transform the data using a scaled logit transform (formula shown above), y = log((x-a)/(b-x)), which maps (a,b) to the whole real line. Here x is on the original scale & y is the transformed data.
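To get a feel for how the interval is stretched onto the real line, here is a small sketch. The helper name scaled_logit and the limits 0 and 100 are assumptions made for this illustration only.

```python
import numpy as np

def scaled_logit(x, floor, cap):
    """Map values strictly inside (floor, cap) onto the real line."""
    x = np.asarray(x, dtype=float)
    return np.log((x - floor) / (cap - x))

floor, cap = 0.0, 100.0  # hypothetical limits
x = np.array([0.01, 1.0, 50.0, 99.0, 99.99])
y = scaled_logit(x, floor, cap)

# The midpoint of the interval maps to 0; values near the floor map to large
# negative numbers and values near the cap map to large positive numbers.
for xi, yi in zip(x, y):
    print(f"x = {xi:6.2f}  ->  y = {yi:8.3f}")
```

The mapping is increasing and symmetric around the midpoint of the interval, so the ordering of the original values is preserved on the transformed scale.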

Python Code

import numpy as np

# Suppose we have a data frame df_F with a column 'y' holding the time series

# Value of Floor (a is a placeholder for your lower limit)

floor = a

# Value of Cap (b is a placeholder for your upper limit)

cap = b

# Scaled logit transform: maps values in (floor, cap) onto the whole real line

df_F['y'] = np.log((df_F['y']-floor)/(cap-df_F['y']))

This code transforms the time series in column ‘y’ of a dataframe named ‘df_F’. It takes the natural logarithm of a ratio: each value’s distance above the floor divided by its distance below the cap. Every original value must lie strictly between floor and cap for this ratio to be positive.

Here’s a breakdown of the code:

- floor and cap are variables that hold the lower and upper limits of the original data.

- df_F['y'] refers to the ‘y’ column of the dataframe df_F. This column is assumed to contain the time series data to be transformed.

- (df_F['y']-floor) subtracts the floor value from each value in the ‘y’ column of df_F.

- (cap-df_F['y']) subtracts each value in the ‘y’ column of df_F from the cap value.

- (df_F['y']-floor)/(cap-df_F['y']) divides the distance above the floor by the distance below the cap, resulting in a positive ratio for each time point.

- np.log() applies the natural logarithm to each ratio, which maps the positive ratios onto the whole real line.

The overall effect of this code is to replace the ‘y’ time series in df_F with its scaled logit. Values close to floor become large negative numbers, values close to cap become large positive numbers, and the transformed series is no longer restricted to a range.

So, we will build a forecasting model on the transformed column and reverse transform the forecast to bring back the values to the original scale.

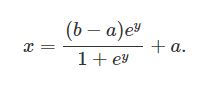

Scaled logit reverse transform

To reverse the transformation, we will use:

Python Code

range_fc=cap-floor

# forecast['yhat'] is the forecasted values saved in a data frame "forecast" & in a column "yhat"

exp_fc=np.exp(forecast['yhat'])

forecast['yhat']=(range_fc*exp_fc)/(1+exp_fc)+floor

This code performs a back-transformation on the forecasted values of a time series. The model was built on the scaled logit of the data, so its forecasts are on the transformed scale and need to be converted back to the original scale, where they lie between floor and cap.

Here’s a breakdown of the code:

- range_fc is the width of the allowed range, calculated as the difference between the cap and floor values.

- forecast['yhat'] is a column in the forecast dataframe that contains the forecasted values on the transformed scale.

- exp_fc calculates the exponential of each value in the forecast['yhat'] column, undoing the logarithm applied earlier.

- (range_fc*exp_fc)/(1+exp_fc) applies the logistic function exp_fc/(1+exp_fc), which lies between 0 and 1, and multiplies it by range_fc, so the result lies between 0 and cap-floor.

- +floor adds the floor value back, shifting the result so that it lies between floor and cap.

The result of this code is a dataframe forecast whose ‘yhat’ column contains the forecasted values of the time series on their original scale, all lying between floor and cap.

So, the reverse transform has been done to have all values in the original scale.
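Putting the two transforms together, the sketch below compares a forecast made directly on the raw data with one made through the scaled logit. Everything specific in it is an assumption for illustration: the data are made up, the cap of 40 is a hypothetical classroom capacity, plain NumPy arrays stand in for the data frame columns, and the straight-line trend from np.polyfit stands in for the real forecasting model.

```python
import numpy as np

floor, cap = 0.0, 40.0  # hypothetical limits: a classroom with 40 seats

# Illustrative history that approaches the cap (made-up data)
y_hist = np.array([20.0, 25.0, 29.0, 33.0, 35.0, 37.0, 38.0, 39.0])
t = np.arange(len(y_hist))
t_future = np.arange(len(y_hist), len(y_hist) + 6)

# Stand-in model on the raw data: the straight line overshoots the cap
naive = np.polyval(np.polyfit(t, y_hist, 1), t_future)

# Scaled logit transform, same stand-in model, then the reverse transform
yt = np.log((y_hist - floor) / (cap - y_hist))
yhat_t = np.polyval(np.polyfit(t, yt, 1), t_future)
exp_fc = np.exp(yhat_t)
yhat = (cap - floor) * exp_fc / (1 + exp_fc) + floor

print("naive forecast exceeds cap:", naive.max() > cap)
print("transformed forecast within limits:",
      bool(((yhat > floor) & (yhat < cap)).all()))
```

The model itself is unchanged between the two forecasts. Only the scale it is fitted on differs, which is what keeps the second forecast inside the limits.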

Points to consider while using this transformation technique

- Historical minimum and maximum values can occur again in the future, so the limits must leave room for them

- The allowed range for Cap: above the historical maximum value, up to infinity

- The allowed range for Floor: below the historical minimum value, down to zero

- If you do not follow the above points, the formula gives inconsistent results during transformation for values x<=a or x>=b, because the natural logarithm function log(x) is defined only for x>0, and the ratio (x-a)/(b-x) is zero, negative, or undefined for such values.
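One way to guard against this is to check the limits against the history before transforming. With NumPy arrays, the transform does not raise an error for out-of-range values. It returns inf, -inf or nan with a runtime warning, which can be easy to miss. The helper check_limits below is a hypothetical name used only for this sketch.

```python
import numpy as np

def check_limits(y, floor, cap):
    """Raise ValueError unless floor < min(y) and max(y) < cap."""
    y = np.asarray(y, dtype=float)
    if not floor < y.min():
        raise ValueError(
            f"floor={floor} must be below the historical minimum {y.min()}")
    if not y.max() < cap:
        raise ValueError(
            f"cap={cap} must be above the historical maximum {y.max()}")

y = np.array([3.0, 7.0, 10.0])  # made-up history

check_limits(y, floor=0.0, cap=12.0)  # valid limits: passes silently

try:
    check_limits(y, floor=0.0, cap=10.0)  # cap equals the historical maximum
    rejected = False
except ValueError as err:
    rejected = True
    print(err)
```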

Conclusion

In many cases, time series data have inherent upper and lower bounds, and forecasting methods applied directly to the raw data may not take these bounds into account. This article shed some light on how to “ensure time-series forecasts stay within limits”: transform the series with the Scaled Logit Transform, build the model on the transformed data, and apply the Scaled Logit Reverse Transform to the forecasts. The technique handles time series data with inherent limits and can be implemented using readily available tools in Python.

